Meta announced a host of new AI-powered bots, features and products to be released across its messaging apps, the Meta Quest 3 and the future Ray-Ban Meta smart glasses. The new features, ranging from an AI assistant to image editing, harness the power of generative AI to make Meta’s technology just that much more addictive.

Although, per Meta’s wording, the new AI experiences and features “give you the tools to be more creative, expressive and productive.”

The slew of AI-focused announcements came as part of Meta’s annual Connect conference, where the company announced its newest mixed reality headset and plans to release smart glasses in partnership with Ray-Ban.

All of the AI tech is based on Llama 2, Meta’s new family of open access AI models released in July. The large language model is designed to generate text and code in response to prompts, and it’s trained on a mix of publicly available data, according to Meta. The company noted that Llama 3 would be coming out in 2024.

Meta also announced at Connect its new image generator, Emu, which it will use to power things like AI stickers and image editing.

Let’s dive into all of the new ways for Meta to use AI.

AI Assistant, backed by Bing

Meta’s AI Assistant is powered by Llama 2 LLM. Featuring: Ahmad Al-Dahle, VP of GenAI. Image Credits: Meta

Meta’s AI Assistant is designed to give you real-time information and generate photorealistic images from text prompts in seconds. It can help plan a trip with friends in a group chat, answer general-knowledge questions and search the internet through Microsoft’s Bing to provide real-time web results.

Using Llama 2, Meta said it created specialized datasets anchored in natural conversation so the AIs would respond in a conversational and friendly tone.

“With Meta AI, we saw an opportunity to take this capability and create an assistant that can do more than just write poems,” said Ahmad Al-Dahle, VP of GenAI at Meta, on Wednesday. “Behind Meta AI, we built an orchestrator. And it can seamlessly detect a user’s intent from a prompt and route it to the right extension.”

The first extension will be the web search, powered by Bing, to help with queries that require real-time information.

“Whether you want to know the history of Eggs Benedict, or how to make it, or even where to get it in San Francisco, Meta AI can help ensure you have access to the most up-to-date information through the power of search,” said Al-Dahle.
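To make the routing idea concrete, here is a minimal Python sketch of an orchestrator that guesses a prompt's intent and hands it to an extension. This is not Meta's implementation, which the company did not detail. The keyword cues, the function names (`detect_intent`, `orchestrate`) and the two stand-in extensions are all assumptions made for illustration, and a production system would presumably use a learned classifier rather than keyword matching.

```python
from typing import Callable, Dict

# Hypothetical cues suggesting a prompt needs up-to-date information.
REALTIME_CUES = ("today", "latest", "near me", "where to get", "score", "weather")


def web_search(prompt: str) -> str:
    """Stand-in for a search extension; a real one would call a search API."""
    return f"[web_search] results for: {prompt}"


def chat_model(prompt: str) -> str:
    """Stand-in for answering directly from the language model."""
    return f"[chat_model] reply to: {prompt}"


# Registry mapping an intent label to the extension that handles it.
EXTENSIONS: Dict[str, Callable[[str], str]] = {
    "web_search": web_search,
    "chat_model": chat_model,
}


def detect_intent(prompt: str) -> str:
    """Toy intent detection: keyword matching instead of a learned classifier."""
    lowered = prompt.lower()
    if any(cue in lowered for cue in REALTIME_CUES):
        return "web_search"
    return "chat_model"


def orchestrate(prompt: str) -> str:
    """Detect the intent, then route the prompt to the matching extension."""
    return EXTENSIONS[detect_intent(prompt)](prompt)


if __name__ == "__main__":
    print(orchestrate("Where to get Eggs Benedict in San Francisco"))
    print(orchestrate("Write a poem about brunch"))
```

In this sketch, adding an extension means registering another handler and teaching `detect_intent` to return its label; the routing step itself stays unchanged.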

The AI Assistant will be available in beta in the U.S. on WhatsApp, Messenger and Instagram, and it’s coming soon to Ray-Ban Meta smart glasses and the Quest 3 VR headset.

AI personality chatbots based on celebrities

Each AI personality is based on a real celebrity or influencer. Image Credits: Meta

Meta dropped 28 AI characters modeled on famous people from across the worlds of sports, music, social media and more, though the characters themselves are generated entirely by AI. You can think of them as topic-specific chatbots that you can message on WhatsApp, Messenger and Instagram. For example:

- Football star Tom Brady’s likeness is being used for a character called “Bru” who can talk to you about sports.

- Naomi Osaka, a tennis player, is featured as “Tamika” to talk all things manga.

- YouTube personality Mr Beast is playing “Zach” to be a…funny guy?

- MMA fighter Israel Adesanya is showing up as “Luiz” to talk about MMA.

- Model Kendall Jenner is showing up as “Billie” to play the role of a big sister type.

Meta’s AIs were built on the Llama 2 LLM. Most of their knowledge bases are limited to information that largely existed before 2023, but Meta says it hopes to bring its Bing search function to its AIs in the coming months.

The characters won’t just respond via text — they will also be able to talk next year. But for now there is no audio. Any video element you see today and in the future is based on AI-generated animations. Meta filmed the people representing the different AIs and then used “generative techniques” to turn those disparate animations into a cohesive experience.

Meta would not clarify to TechCrunch how it compensated the celebrities for use of their likenesses.

AI Studio for businesses and creators

Meta’s AI Studio is available for businesses and creators. Featuring: Angela Fan, research scientist. Image Credits: Meta

Meta’s AI Studio platform will let businesses build AI chatbots for the company’s various messaging services, including Facebook, Instagram and Messenger.

Starting with Messenger, AI Studio will allow companies to “create AIs that reflect their brand’s values and improve customer service experiences,” Meta writes in a post. Onstage, Meta CEO Mark Zuckerberg clarified that the use cases Meta envisions are primarily e-commerce and customer support.

AI Studio will be available in alpha to start, and Meta says that it’ll scale the toolkit further beginning next year.

In the future, creators will be able to tap the AI Studio, as well, to build AIs that “extend their virtual presence” across Meta’s apps. Meta noted that these would have to be sanctioned by the creator and controlled directly by them.

In the coming year, Meta will build a sandbox so that anyone can experiment with creating their own AI, something that Meta will bring to the metaverse.

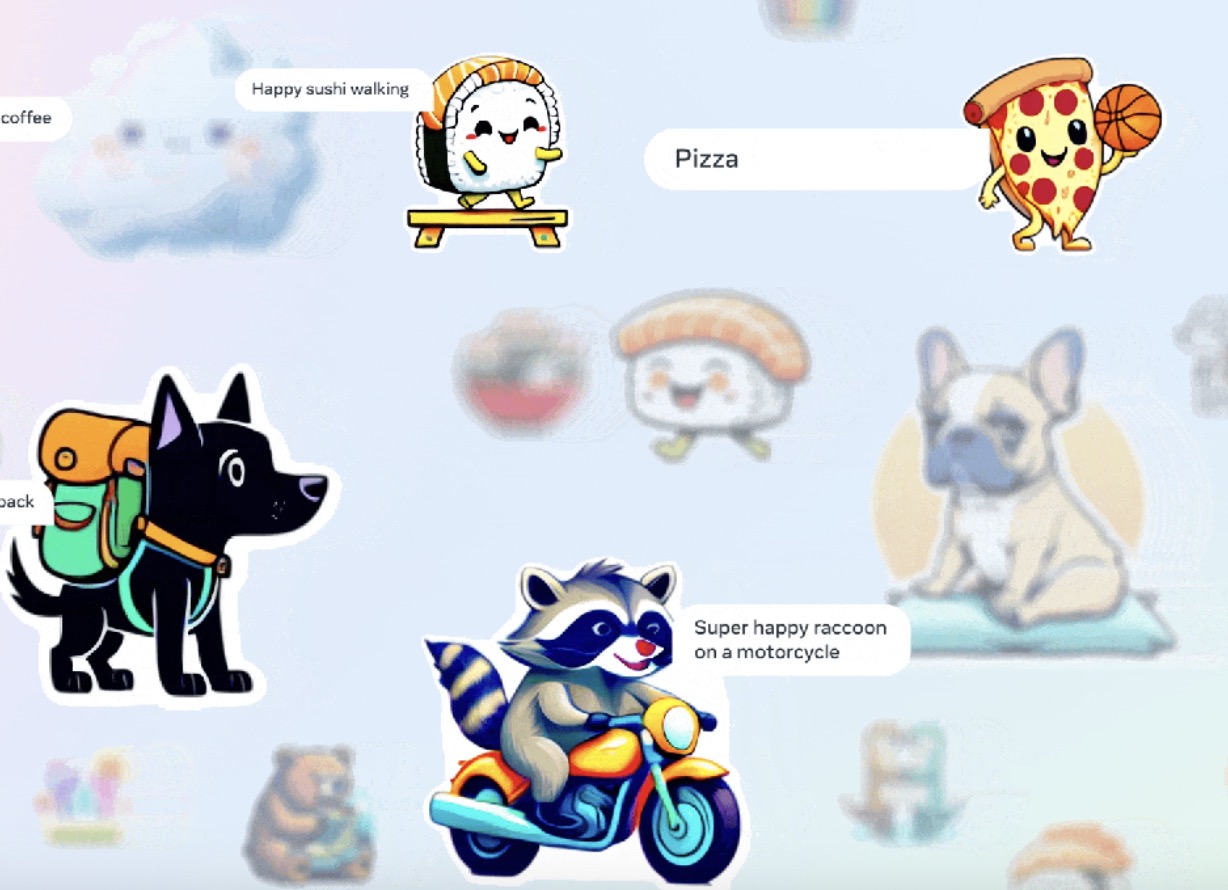

AI stickers, powered by Emu

Image Credits: Meta

CEO Mark Zuckerberg announced that generative AI stickers would be coming to Meta’s messaging apps. The feature, which is powered by Meta’s new foundational model for image generation, Emu, will allow users to create unique AI stickers in a matter of seconds across Meta apps including WhatsApp, Messenger, Instagram and even Facebook Stories.

“Every day people send hundreds of millions of stickers to express things in chats,” said Zuckerberg. “And every chat is a little bit different and you want to express subtly different emotions. But today we only have a fixed number — but with Emu now you have the ability to just type in what you want,” he said.

To use the stickers, you type a description of the image you want to see into a text box. The feature was demoed in WhatsApp, where Zuckerberg showed off crazy ideas like “Hungarian sheep dog driving a 4×4.” Meta says it takes three seconds on average to generate multiple options to share instantly.

The feature will initially be available to English-language users and will begin to roll out over the next month, the company says.

AI image editing — restyle photos, add a backdrop

Image Credits: Meta

Meta says that soon, you’ll be able to transform your images or co-create AI-generated images with friends. Two new features, restyle and backdrop, are coming soon to Instagram in the U.S., and both are powered by Emu.

Restyle lets you reimagine the visual style of an image by typing in a prompt such as “watercolor” or something more detailed, like “collage from magazines and newspapers, torn edges,” Meta explained in a blog post. Zuckerberg demoed the feature on a photo of his dog, Beast, using AI to render it in origami and cross-stitch styles.

Backdrop, meanwhile, changes the scene or background of your image by using prompts.

Meta says the AI images will indicate the use of AI “to reduce the chances of people mistaking them for human-generated content.” The company said it’s also experimenting with forms of visible and invisible markers.

A word on safety

“If you spent any time playing with conversational AI, as you probably know, they have the potential to say things that are inaccurate or even inappropriate. And that can happen with ours, too,” said Al-Dahle.

Al-Dahle described onstage the “thousands of hours” of red-teaming and working with prompts that went into training Meta’s AI assistant and characters to steer clear of iffy topics. Red-teaming is an iterative process where you try to get the model to say harmful things, apply fixes and repeat. Continuously.
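The attack, fix and repeat cycle can be sketched as a loop. The Python below is a toy illustration only, not Meta's process: the "model" is a function that refuses a set of known prompts, the "fix" simply adds failing prompts to that set, and every name here (`make_toy_model`, `is_harmful`, `red_team`) is hypothetical. Real red-teaming relies on human testers, and fixes involve retraining or other mitigations.

```python
from typing import Callable, FrozenSet, List, Tuple

Model = Callable[[str], str]


def make_toy_model(refused: FrozenSet[str]) -> Model:
    """Toy 'model': refuses prompts in `refused`, otherwise complies."""
    def model(prompt: str) -> str:
        if prompt in refused:
            return "I can't help with that."
        return f"Sure: {prompt}"
    return model


def is_harmful(response: str) -> bool:
    """Toy checker: flags a response that complied with a 'harmful' request."""
    return response.startswith("Sure:") and "harmful" in response


def red_team(attack_prompts: List[str], max_rounds: int = 5) -> Tuple[Model, int]:
    """Attack the model, patch whatever got through, and repeat.

    Returns the patched model and the number of fix rounds that were applied.
    """
    refused: FrozenSet[str] = frozenset()
    model = make_toy_model(refused)
    for rounds_used in range(max_rounds):
        # Attack: collect the prompts that still produce a harmful response.
        failures = [p for p in attack_prompts if is_harmful(model(p))]
        if not failures:
            return model, rounds_used
        # Fix: here, just teach the toy model to refuse the failing prompts.
        refused = refused | frozenset(failures)
        model = make_toy_model(refused)
    return model, max_rounds


if __name__ == "__main__":
    prompts = ["harmful request A", "harmless question", "harmful request B"]
    patched, rounds = red_team(prompts)
    print(rounds, patched("harmful request A"))
```

The loop stops once an attack round finds no failures or the round budget runs out. In practice the set of attack prompts keeps growing, which is why the process is described as continuous.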

Meta says it is also releasing system cards alongside its AIs so people can understand “what’s inside and how they were built.”
